Source:

Boosting AI with neuromorphic computing

Nat Comput Sci 5, 1–2 (2025). https://doi.org/10.1038/s43588-025-00770-4

Neuromorphic computing has the potential to greatly improve the power efficiency, performance, and applicability of AI on the edge.

Types of artificial intelligence (AI)

Large Language Models (LLMs) $\rightarrow$ general-purpose, human-like conversational agents such as ChatGPT.

Expert Domain-Specific Tools:

- NYUTron (an all-purpose clinical prediction engine)

- ChemCrow (used in organic synthesis, drug discovery, and materials design)

Reasoning AI Models: OpenAI o1 and o3-mini.

The challenge with current AI lies in training and implementing large-scale models, which requires state-of-the-art digital processors $\Rightarrow$ high computing speed, but at a high energy cost.

This limitation arises from the design of conventional digital processors: a phenomenon known as the von Neumann bottleneck, which stems from the physical separation of memory and the computing processor.

The von Neumann bottleneck highlights the need for a balanced system design in which memory speed, bus bandwidth, and processing power are optimized together.

In the classical von Neumann architecture, the central processing unit (CPU) and memory are physically separated and communicate through a single data path, or bus. Because data and instructions are transferred sequentially over this single bus, the system experiences:

- Limited Bandwidth $\Rightarrow$ The data transfer rate is constrained by the bus speed rather than the CPU’s processing capability.

- Instruction and Data Fetching $\Rightarrow$ In a von Neumann architecture, instructions and data share the same memory and communication channel, leading to conflicts and delays, especially in modern applications requiring large datasets or parallel processing.
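The bandwidth limit can be made concrete with a roofline-style estimate. This is a standard performance model swapped in for illustration, not something taken from the source. Under this model, attainable throughput is the smaller of two values: the processor's peak, or the bus bandwidth multiplied by the arithmetic intensity (FLOPs per byte moved). The peak and bandwidth figures below are hypothetical.

```python
# Roofline-style sketch of the von Neumann bottleneck.
# All hardware numbers are illustrative assumptions, not measurements.


def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float,
                      flops_per_byte: float) -> float:
    """Throughput is capped by compute or by how fast the bus delivers operands."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


def matvec_intensity(n: int, bytes_per_weight: int = 4) -> float:
    """FLOPs per byte moved for an n x n matrix-vector product.

    Each weight is fetched once and used in one multiply-add (2 FLOPs);
    the small input/output vectors are ignored.
    """
    flops = 2 * n * n
    bytes_moved = bytes_per_weight * n * n
    return flops / bytes_moved


peak = 10_000.0     # assumed peak compute, GFLOP/s
bandwidth = 500.0   # assumed memory-bus bandwidth, GB/s
intensity = matvec_intensity(4096)
print(f"arithmetic intensity: {intensity:.2f} FLOP/byte")
print(f"attainable: {attainable_gflops(peak, bandwidth, intensity):.0f} "
      f"of {peak:.0f} GFLOP/s peak")
```

With these assumed figures, a large matrix-vector product (the core operation of neural-network inference) reaches only 250 of the 10,000 GFLOP/s peak, or 2.5%. For most of the run, the processor is waiting on the bus.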

Mitigation Strategies:

- Caching: Small, fast memory stores frequently accessed data closer to the CPU

- Parallel Processing: Using multiple cores or processors to distribute the workload

- Harvard Architecture: Separating data and instruction memory to avoid contention

- Increased Bus Bandwidth: Faster or wider buses to move more data simultaneously
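Caching, the first mitigation above, can be illustrated with a small least-recently-used (LRU) cache placed in front of a simulated slow memory. This is a generic sketch, not a model of any particular CPU. The `LRUCache` class and the memory contents are hypothetical. The sketch counts how many reads actually cross the simulated bus.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Least-recently-used cache in front of a simulated slow main memory.

    bus_fetches counts the reads that miss the cache and must cross the bus.
    """

    def __init__(self, capacity: int, memory: dict[int, int]) -> None:
        self.capacity = capacity
        self.memory = memory                        # stands in for slow main memory
        self.lines: OrderedDict[int, int] = OrderedDict()
        self.bus_fetches = 0

    def read(self, addr: int) -> int:
        if addr in self.lines:
            self.lines.move_to_end(addr)            # hit: mark as most recently used
            return self.lines[addr]
        self.bus_fetches += 1                       # miss: fetch over the bus
        value = self.memory[addr]
        self.lines[addr] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)          # evict the least recently used line
        return value


memory = {addr: addr * 10 for addr in range(100)}
cache = LRUCache(capacity=4, memory=memory)
# A loop that re-reads the same 4 addresses has good temporal locality.
for _ in range(25):
    for addr in (0, 1, 2, 3):
        cache.read(addr)
print(f"{cache.bus_fetches} bus fetches for 100 reads")
```

Only the first read of each address crosses the bus; the other 96 reads are served from the cache. A workload that touches more data than the cache holds, such as streaming a weight matrix much larger than the cache, keeps evicting lines and fetching again. So caching helps only when data is reused.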

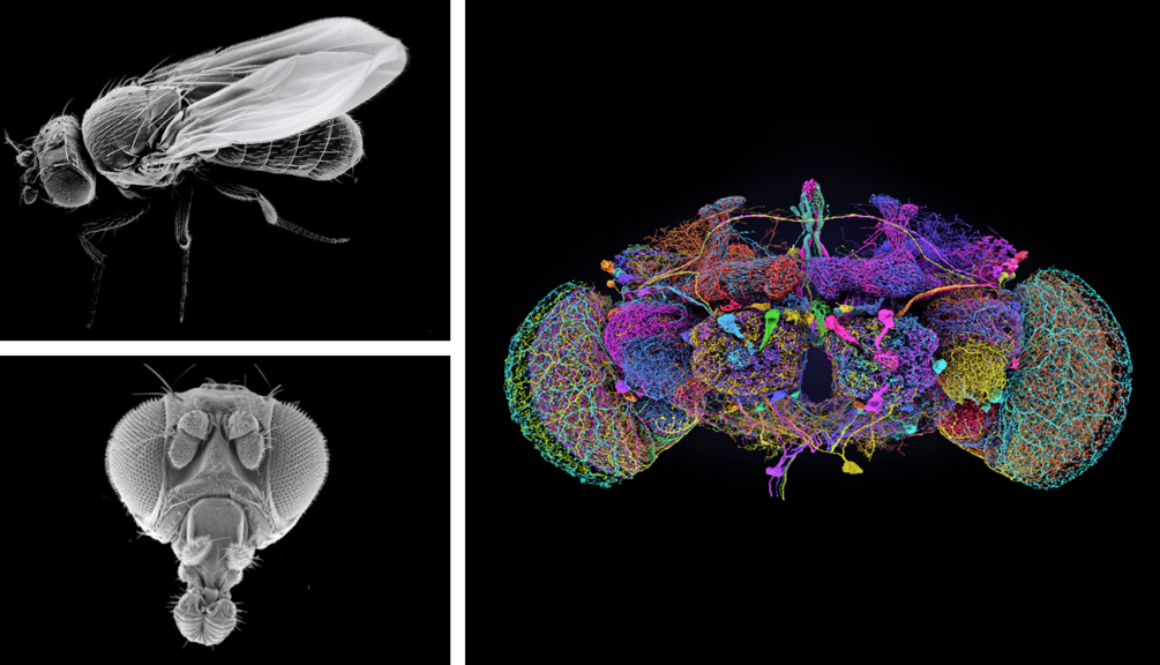

Neuromorphic computers perform computations by mimicking the structure and function of neurons and synapses in the brain.

One solution is to redesign the computing architecture using spiking neural networks (SNNs) with memristors as neurons. With neuromorphic computing, information processing and memory are co-located and integrated within the SNN. This eliminates the energy-costly data-movement step inherent in the von Neumann architecture.
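Spiking neurons are often modeled as leaky integrate-and-fire (LIF) units. The membrane potential integrates input current and leaks toward rest; when it crosses a threshold, the neuron emits a discrete spike. The sketch below is a textbook LIF model in software, assumed here for illustration. The parameter values are arbitrary, and the sketch does not model any specific memristive neuron.

```python
import numpy as np


def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, r_m=1.0):
    """Leaky integrate-and-fire neuron integrated with forward Euler.

    tau * dV/dt = -(V - v_rest) + r_m * I(t); when V reaches v_threshold the
    neuron emits a spike and V is reset. Returns the membrane trace and the
    time-step indices at which spikes occurred.
    """
    v = v_rest
    trace = np.empty(len(input_current))
    spikes = []
    for t, i_t in enumerate(input_current):
        v += dt / tau * (-(v - v_rest) + r_m * i_t)   # leak plus input drive
        if v >= v_threshold:
            spikes.append(t)                          # discrete spike event
            v = v_reset
        trace[t] = v
    return trace, spikes


trace, spikes = simulate_lif(np.full(200, 1.5))       # supra-threshold drive
_, no_spikes = simulate_lif(np.full(1000, 0.5))       # sub-threshold drive
print(f"{len(spikes)} spikes at steps {spikes}")
print(f"sub-threshold input produced {len(no_spikes)} spikes")
```

With a constant input of 1.5, the potential repeatedly climbs past the threshold and resets, producing a regular spike train. An input of 0.5 settles below threshold and never fires. Information is carried by these sparse spike events rather than by continuous activations.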

Challenges with neuromorphic computing

From the 2nd Nature Conference on Neuromorphic Computing (October 2024), which focused on the transformative power of neuromorphic computing in advancing AI:

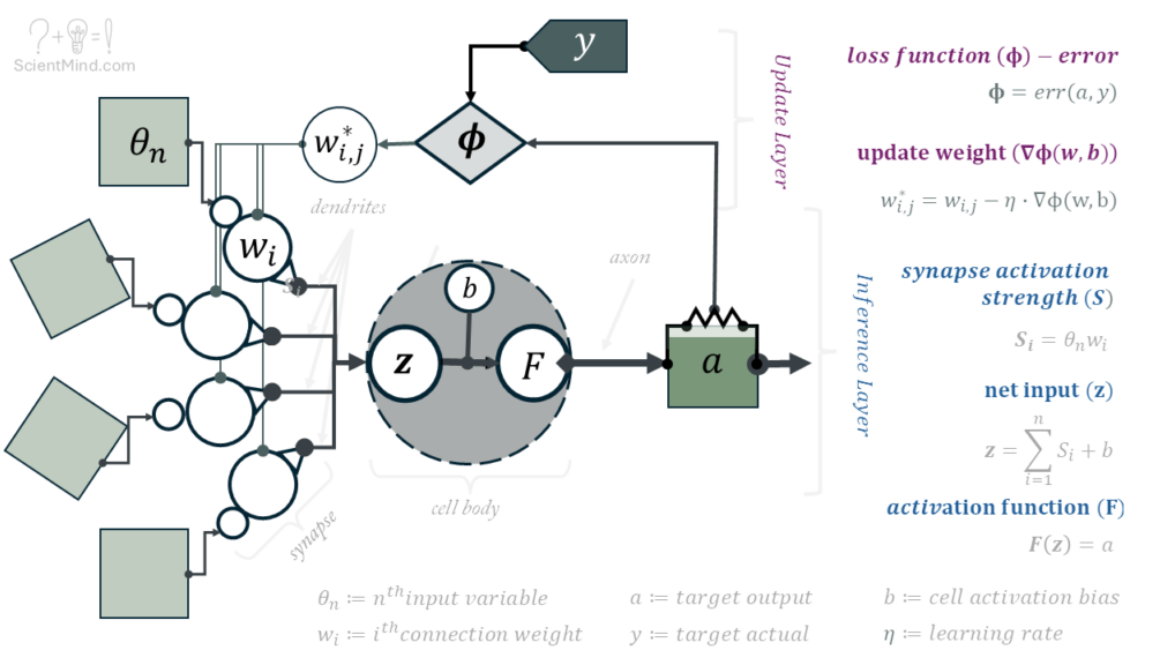

Memristors can mimic the human brain’s energy-efficient synapses and neurons, a concept known as in-memory computing (IMC). $\rightarrow$ IMC leverages local memory devices to perform computations, avoiding the energy-intensive step of moving data around.
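In a memristor crossbar, computation happens where the weights are stored. Assume weights are encoded as device conductances $G_{ij}$ and inputs as row voltages $V_i$. Ohm's law gives each device's current $G_{ij}V_i$, and Kirchhoff's current law sums these currents along each column $j$. One read step therefore performs a full vector–matrix multiplication:

$$
I_j = \sum_{i=1}^{m} G_{ij}\, V_i, \quad j = 1, \dots, n
\qquad\Longleftrightarrow\qquad
\mathbf{I} = G^{\top}\mathbf{V}
$$

Here $m$ and $n$ are the numbers of rows and columns of the array; this notation is introduced for the illustration.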

IMC enables AI tasks to be deployed on local devices (“AI on the edge”) for applications such as:

- Autonomous driving

- Clinical diagnostics

From Lin, Y., Gao, B., Tang, J. et al. Deep Bayesian active learning using in-memory computing hardware. Nat Comput Sci (2024). https://doi.org/10.1038/s43588-024-00744-y

The authors demonstrate the implementation of Deep Bayesian active learning within the IMC framework using memristor arrays to eliminate extensive data movement during vector–matrix multiplication (VMM).
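Below is a minimal NumPy sketch of crossbar VMM. It assumes the common differential-pair encoding: because conductances cannot be negative, each signed weight is stored as the difference of two devices, $W = (G^{+} - G^{-})/s$. The function names, the scale factor `s`, and the maximum conductance `g_max` are hypothetical. This is not necessarily the scheme used by Lin et al.

```python
import numpy as np


def weights_to_conductances(w, g_max=1e-4):
    """Map a signed weight matrix onto two non-negative conductance arrays.

    w has shape (rows, cols); g_max is an assumed maximum device conductance
    in siemens. Returns (g_plus, g_minus, scale) with w = (g_plus - g_minus) / scale.
    """
    scale = g_max / np.max(np.abs(w))
    g_plus = np.where(w > 0, w, 0.0) * scale     # positive part on one device
    g_minus = np.where(w < 0, -w, 0.0) * scale   # negative part on its partner
    return g_plus, g_minus, scale


def crossbar_vmm(v_in, g_plus, g_minus, scale):
    """Column currents I_j = sum_i G_ij * V_i for each array (Ohm + Kirchhoff).

    Subtracting the paired column currents recovers the signed result, and
    dividing by scale converts it back to weight units.
    """
    i_plus = v_in @ g_plus
    i_minus = v_in @ g_minus
    return (i_plus - i_minus) / scale


rng = np.random.default_rng(0)
w = rng.standard_normal((8, 4))   # 8 input rows, 4 output columns
x = rng.standard_normal(8)        # input vector, applied as row voltages
g_plus, g_minus, scale = weights_to_conductances(w)
y = crossbar_vmm(x, g_plus, g_minus, scale)
print(np.allclose(y, x @ w))      # compare with the digital result
```

On hardware, the two products happen as analog current summation inside the array, so the weights never travel to a separate processor. Here NumPy computes them digitally only to check the arithmetic.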

Additionally, they exploit the intrinsic randomness of memristors to efficiently generate random numbers for weight updates during the training of probabilistic AI algorithms $\rightarrow$ reducing latency and power consumption.
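The role of device randomness in probabilistic models can be sketched as Monte Carlo sampling of Bayesian weights. In this hypothetical example, each forward pass draws weights $w = \mu + \sigma\,\varepsilon$. A NumPy Gaussian generator stands in for the memristors' intrinsic noise $\varepsilon$, and the noise is used here for sampling in the forward passes. The averaged predictions then drive an uncertainty-based acquisition step that picks the next point to label. Predictive entropy is used as the acquisition function for simplicity. The authors' network, noise model, and acquisition function may differ.

```python
import numpy as np


def device_noise(rng: np.random.Generator, shape) -> np.ndarray:
    """Hypothetical stand-in for intrinsic memristor randomness.

    On hardware these samples would come from device physics; here a
    Gaussian pseudo-random generator plays that role.
    """
    return rng.standard_normal(shape)


def mc_predict(x, w_mean, w_std, n_samples, rng):
    """Monte Carlo predictive distribution of a Bayesian linear-softmax layer.

    Each pass samples weights w = mean + std * eps with eps from the device.
    Returns class probabilities averaged over passes, shape (n_points, n_classes).
    """
    probs = np.zeros((x.shape[0], w_mean.shape[1]))
    for _ in range(n_samples):
        w = w_mean + w_std * device_noise(rng, w_mean.shape)
        logits = x @ w
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        p = np.exp(logits)
        probs += p / p.sum(axis=1, keepdims=True)
    return probs / n_samples


def select_most_uncertain(probs) -> int:
    """Predictive-entropy acquisition: pick the pool point the model is least sure about."""
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    return int(np.argmax(entropy))


rng = np.random.default_rng(0)
pool = rng.standard_normal((50, 3))        # 50 unlabeled candidates, 3 features
w_mean = rng.standard_normal((3, 2))       # posterior means for 2 classes
w_std = 0.5 * np.ones_like(w_mean)         # assumed posterior standard deviations
probs = mc_predict(pool, w_mean, w_std, n_samples=100, rng=rng)
query = select_most_uncertain(probs)
print(f"next point to label: {query}")
```

In an active-learning loop, the queried point would be labeled, added to the training set, and the weight posterior updated before the next query.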

The importance of IMC is further exemplified by:

Büchel, J., Vasilopoulos, A., Simon, W.A. et al. Efficient scaling of large language models with mixture of experts and 3D analog in-memory computing. Nat Comput Sci (2025). https://doi.org/10.1038/s43588-024-00753-x

The authors propose a three-dimensional (3D) construction of non-volatile memory (NVM) devices that simultaneously meets memory requirements and addresses the parameter-fetching bottleneck in LLMs while reducing energy costs. They employed a conditional computing model, a mixture of experts (MoE), designed to minimize inference costs and training resources.
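Conditional computation in a mixture of experts can be sketched as top-$k$ gating. A router scores every expert, but only the $k$ highest-scoring experts are evaluated for each token. The toy linear experts, the gate matrix `w_gate`, and the choice $k = 2$ below are assumptions for illustration. They are not the architecture used by Büchel et al.

```python
import numpy as np


def moe_forward(x, w_gate, experts, k=2):
    """Sparse mixture-of-experts layer for one token vector x.

    The gate scores every expert, but only the top-k experts are evaluated,
    so per-token compute grows with k rather than with the number of experts.
    experts is a list of (d_in, d_out) matrices acting as toy linear experts.
    Returns the layer output and the indices of the experts that ran.
    """
    scores = x @ w_gate                              # one score per expert
    top = np.argsort(scores)[-k:]                    # the k highest-scoring experts
    gate = np.exp(scores[top] - scores[top].max())
    gate /= gate.sum()                               # softmax over selected experts only
    out = sum(g * (x @ experts[e]) for g, e in zip(gate, top))
    return out, top


rng = np.random.default_rng(0)
d, n_experts = 16, 8
w_gate = rng.standard_normal((d, n_experts))
experts = [rng.standard_normal((d, d)) for _ in range(n_experts)]
x = rng.standard_normal(d)
y, used = moe_forward(x, w_gate, experts, k=2)
print(f"experts evaluated for this token: {sorted(used.tolist())} of {n_experts}")
```

Only 2 of the 8 expert matrices are used for this token, yet all 8 must remain stored and available for the next token. This suggests why keeping expert weights stationary in memory, as in-memory computing does, is attractive for this kind of model.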

Although conditional computing is notoriously impractical for large-scale models on digital processors, the authors demonstrated that mapping the conditional computing mechanism onto a 3D IMC architecture can be a promising approach to scaling up large models.

With the increasing availability of neuromorphic processors, identifying practical applications has become more important than ever. Potential applications include healthcare diagnostics, visual adaptation, and signal processing.

Additionally, hardware–software co-design (or algorithm-guided hardware design) is considered essential for fully realizing the benefits of neuromorphic processors across various applications.

At the conference, two key issues were broadly discussed:

- Lack of community-acknowledged benchmark datasets $\rightarrow$ Without standardized benchmarks, accurately measuring new technological advancements is challenging. The NeuroBench framework aims to address this gap.

- Absence of best practices for code sharing $\rightarrow$ Neuromorphic-related source code is often highly dependent on the underlying hardware, making reproducibility and collaboration difficult.

Establishing standardized practices and infrastructure for sharing code and data, while accounting for hardware differences, will be essential for the continued success of neuromorphic computing.